The Natural Selection of Bad Vibes (Part 2: Social Media)

Why social media makes us sad.

TLDR: The vibes are bad, even though, by most measures we have, things are (comparatively) good. The last post showed how disproportionately negative sharing can emerge from trying to solve problems. This post will show (1) how this leads reasonable people to be overly pessimistic, and (2) how adding social media makes it all much, much worse.

In the last post, we saw that there are lots of good data and bad vibes.

People are more pessimistic than they have been in a long time. And, of course, they’re partially right: there are many terrible things happening in the world right now.

But there have been terrible things happening in most places for most of history. And indeed, many chart-wielding optimists have been telling us for some time that things are better than they’ve ever been.

Maybe the most (in)famous chart is from Gapminder, comparing life expectancy, (real) GDP per capita, and population size across the world from (say) 1900 to 2022:

Strikingly, the lowest life expectancy in 2022 is as high as the highest in 1900.

In the last post, I described a simple model that might help explain why people are pessimistic even when things are going well. If people’s goal in conversations is to solve problems, then they’ll be more likely to talk about problems than positives.
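To make that mechanism concrete, here is a minimal Python sketch of one speaker choosing one topic to share. It is not the code behind the charts in these posts, and its benefit model is an assumption made for illustration: each topic's expected benefit is a uniform draw on [0, 1], scaled up by 1.3 for problems (one reading of "problems are 30% more likely to lead to benefits"), and the speaker picks a topic via softmax with precision 10 (the value the first footnote attributes to real people). The names `softmax_choice` and `share_rate` are hypothetical.

```python
import math
import random


def softmax_choice(values, precision, rng):
    """Pick an index with probability proportional to exp(precision * value)."""
    top = max(values)  # subtract the max so exp() cannot overflow
    weights = [math.exp(precision * (v - top)) for v in values]
    return rng.choices(range(len(values)), weights=weights, k=1)[0]


def share_rate(n_topics, p_positive=0.9, problem_boost=1.3,
               precision=10.0, trials=5000, seed=0):
    """Monte Carlo estimate of the fraction of shared topics that are positives."""
    rng = random.Random(seed)
    positive_shares = 0
    for _ in range(trials):
        # Draw the topics this speaker happens to know about.
        is_positive = [rng.random() < p_positive for _ in range(n_topics)]
        # Assumed benefit model: U(0, 1) for positives, 1.3 * U(0, 1) for problems.
        benefits = [rng.random() * (1.0 if pos else problem_boost)
                    for pos in is_positive]
        if is_positive[softmax_choice(benefits, precision, rng)]:
            positive_shares += 1
    return positive_shares / trials


if __name__ == "__main__":
    for n in (1, 5, 20, 50):
        print(n, round(share_rate(n), 2))
```

With one topic the speaker simply passes it on, so `share_rate(1)` comes out near 0.9. As `n_topics` grows the rate falls, because the highest-benefit options in a larger menu are disproportionately problems.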

Recall this chart: even in a world where 90% of news is good, as the number of items to choose from grows, the proportion of positive conversation topics will be much lower than 90%:

In this post, I’d like to do two things:

First, I want to incorporate people’s beliefs into the model. If people are aware of the fact that conversations tend to be about negative topics, will they be able to correct for that and avoid becoming overly pessimistic? (Answer: no.)

Second, what happens to these dynamics when we embed them within a social-media network? (Answer: bad things.)

It’s widely thought that social media makes people more negative. Indeed, there’s empirical evidence that people—especially those who are politically active—tend to repost negatively-valenced content more than positively-valenced content:

My model will explain this—and also vindicate the intuition that social media makes people’s pessimism much worse.

Will negative sharing lead to negative beliefs?

In the model from last time, we used the proportion of news-items that were positive as a measure of how good the world was—using a 90%-positive world for illustration. The proportion of conversations about positive topics was far below 90%.

But what we want to explain is not merely negative conversations, but pessimistic beliefs or estimates about how good the world is. After all, people genuinely tend to think that things are bad (and, at least on some topics, far worse than they are). Recall that people’s estimates of the proportion of people who self-report being happy (20–60%) are far below the true value (70–95%):

Can our model predict this? So far, we’ve only modeled the proportion of conversations that are positive or negative—not what reasonable people will infer about how good the world is from this fact.

Of course, if people naively assume that conversation-topics are representative samples of the news, then they will be overly pessimistic. But people don’t do that—they know that conversation-topics are selectively chosen. People rarely talk about brushing their teeth; but no one infers from this that people rarely brush their teeth! (I hope.)

Our question: what happens in our model if people are Bayesians who understand that people are more likely to talk about problems than positives?

Of course, if Bayesians have accurate priors about the setup and receive unambiguous evidence, they’ll converge to the truth in the long run. But in the short run—especially if they mis-estimate the strengths of various effects—they can easily be led astray.

For example, here’s what happens to Bayesians who have an accurate causal model of how people decide what topics to share (they compute the expected benefit of sharing each topic and choose via softmax) and accurate prior estimates of every parameter in our model, except that they over-estimate how random people are in their choices[1]:

The orange line—representing the proportion of positives shared—is taken from the above model, assuming problems are 30% more likely to lead to benefits than positives.

The green line is the Bayesian’s estimate for what proportion of items are positives, upon seeing the proportion of positives people share. (The bars are their 80%-credible intervals, i.e. the range they’re 80%-sure the true value is within.) So with 20+ topics to choose from, the Bayesian estimates that around 65–70% of news is positive—substantially (but not radically) below the true value of 90%.
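A minimal Python sketch of this kind of inference follows, under simplifying assumptions that are illustrative rather than the post's own. It uses a hypothetical uniform benefit model (problems' benefits scaled by 1.3), replaces the simulation with its many-topics limit so the predicted share rate has a closed form, treats 100 observed shares as binomial draws, and puts a uniform prior on the proportion of positive news. The observer believes the softmax precision is `believed_precision`; the mis-estimate in the first footnote corresponds to believing 3 when the truth is 10. All function names are hypothetical.

```python
import math


def mean_exp(precision, scale):
    """E[exp(precision * scale * U)] for U ~ Uniform(0, 1)."""
    a = precision * scale
    return math.expm1(a) / a


def predicted_share_rate(p, precision, problem_boost=1.3):
    """Many-topics limit of P(shared topic is positive) when a fraction p of news is positive."""
    pos = p * mean_exp(precision, 1.0)
    neg = (1 - p) * mean_exp(precision, problem_boost)
    return pos / (pos + neg)


def posterior(k_positive, n_shares, believed_precision, grid_size=1001):
    """Grid posterior over p with a uniform prior and a binomial likelihood."""
    grid = [i / (grid_size - 1) for i in range(grid_size)]
    log_lik = []
    for p in grid:
        r = predicted_share_rate(p, believed_precision)
        r = min(max(r, 1e-12), 1 - 1e-12)  # keep log() finite at p = 0 and p = 1
        log_lik.append(k_positive * math.log(r)
                       + (n_shares - k_positive) * math.log(1 - r))
    top = max(log_lik)
    weights = [math.exp(v - top) for v in log_lik]
    total = sum(weights)
    return grid, [w / total for w in weights]


def summarize(grid, probs, mass=0.8):
    """Posterior mean and the central credible interval holding `mass` probability."""
    mean = sum(p * w for p, w in zip(grid, probs))
    lo_tail, hi_tail = (1 - mass) / 2, (1 + mass) / 2
    cumulative, lo, hi = 0.0, None, None
    for p, w in zip(grid, probs):
        cumulative += w
        if lo is None and cumulative >= lo_tail:
            lo = p
        if hi is None and cumulative >= hi_tail:
            hi = p
    return mean, (lo, hi)


if __name__ == "__main__":
    # What people actually share in a 90%-positive world with precision 10.
    observed = predicted_share_rate(0.9, precision=10)
    n = 100
    k = round(observed * n)
    for believed in (10, 3):
        mean, (lo, hi) = summarize(*posterior(k, n, believed))
        print(f"believed precision {believed}: mean {mean:.2f}, 80% CI [{lo:.2f}, {hi:.2f}]")
```

When the believed precision matches the true one, the posterior centers near 0.9. Believing that people choose more randomly (precision 3) pulls the estimate well below it, which is the qualitative pattern described above. The exact numbers depend on the assumed benefit model and need not match the post's chart.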

Upshot: Even people who realize that conversation-topics aren’t representative will still be led to (some) excess pessimism by just how negative conversations tend to be.

What does social media do?

What happens when we embed all this in a social network? Things get worse.

We already know that as the number of topics increases, people will get more negative. But social media does this in a particular way: the extra topics it provides aren’t randomly selected—they have already been filtered through the sharing process we’ve described, meaning they’re already skewed toward negativity.

So the dynamics ramify. With each round of sharing, people have an increasing proportion of problems to choose from—and since those problems were selected as the most worth-sharing (e.g. the most politically-urgent disaster), there’s even more reason to re-share those problems. We get a runaway cycle of negativity.

How can we model this?

First, let’s build a simple social network. We have some number of people—say 500. They are connected in a network of “friends”—people who can see what they share. For simplicity, I’ll assume this is a symmetric Bernoulli graph: each pair of people has a 1% chance to be connected—for example:
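This is the Erdős–Rényi G(n, p) random graph. Here is a minimal Python sketch that stores each person's friends as a set. The name `bernoulli_graph` is hypothetical, and the defaults are the 500 people and 1% link probability above.

```python
import random


def bernoulli_graph(n_people=500, p_edge=0.01, seed=0):
    """Symmetric random graph: each unordered pair is linked with prob p_edge."""
    rng = random.Random(seed)
    friends = [set() for _ in range(n_people)]
    for i in range(n_people):
        for j in range(i + 1, n_people):  # visit each pair once; no self-links
            if rng.random() < p_edge:
                friends[i].add(j)
                friends[j].add(i)  # symmetric: friendship goes both ways
    return friends


if __name__ == "__main__":
    graph = bernoulli_graph()
    print(sum(len(f) for f in graph) / len(graph))  # average number of friends
```

Each person has 499 potential friends, so with a 1% link probability the expected number of friends is about 5.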

How does sharing work? Each agent starts out with an initial set of topics (say, 10), randomly chosen from a large pool. 90% of the topics in the large pool are positives, since they’re randomly selected from the (we’re supposing, great) world.

In each round, they choose a single topic to share with their friends using the same method as before—they calculate the expected benefit of sharing each topic, and then choose via softmax.

After sharing, each person updates their list of available topics by adding any topic that one of their friends shared which they didn’t already know about. These topics are now things they can choose to share in later rounds. (If your friend tells you about a new ice cream place, or a new political disaster, you might decide to post about it tomorrow.)

The process repeats for (say) 10 rounds. What happens?
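Here is a minimal Python sketch of this process. It rests on illustrative assumptions: each topic in the pool gets a fixed expected benefit drawn uniformly from [0, 1] and scaled by 1.3 for problems, people choose with softmax precision 10, nobody is barred from re-sharing a topic they shared earlier, and the graph is built inside the function so the block stands alone. It is not the author's code. The names and parameter defaults (for example the 50,000-topic pool) are hypothetical choices. The function returns, for each round, the fraction of that round's shares that were positives.

```python
import math
import random


def softmax_choice(values, precision, rng):
    """Pick an index with probability proportional to exp(precision * value)."""
    top = max(values)
    weights = [math.exp(precision * (v - top)) for v in values]
    return rng.choices(range(len(values)), weights=weights, k=1)[0]


def simulate(n_people=500, p_edge=0.01, initial_topics=10, rounds=10,
             p_positive=0.9, problem_boost=1.3, precision=10.0,
             pool_size=50_000, seed=0):
    rng = random.Random(seed)
    # Symmetric Bernoulli (Erdos-Renyi) friendship graph.
    friends = [set() for _ in range(n_people)]
    for i in range(n_people):
        for j in range(i + 1, n_people):
            if rng.random() < p_edge:
                friends[i].add(j)
                friends[j].add(i)
    # Large pool of topics as (is_positive, expected_benefit); assumed benefit model.
    pool = []
    for _ in range(pool_size):
        pos = rng.random() < p_positive
        pool.append((pos, rng.random() * (1.0 if pos else problem_boost)))
    # Each person starts with a random handful of topics from the pool.
    known = [set(rng.sample(range(pool_size), initial_topics))
             for _ in range(n_people)]
    rates = []
    for _ in range(rounds):
        # Everyone shares one topic, chosen by softmax over expected benefit.
        shared = []
        for topics in known:
            options = list(topics)
            benefits = [pool[t][1] for t in options]
            shared.append(options[softmax_choice(benefits, precision, rng)])
        rates.append(sum(pool[t][0] for t in shared) / n_people)
        # Everyone adds whatever their friends just shared to their own menu.
        for i in range(n_people):
            known[i].update(shared[f] for f in friends[i])
    return rates


if __name__ == "__main__":
    runs = [simulate(seed=s) for s in range(20)]  # average over 20 runs
    print([round(sum(r[t] for r in runs) / len(runs), 2) for t in range(10)])
```

The key design choice is that a topic carries its benefit with it when shared. What friends pass along has therefore already been selected for high benefit, which is the filtering the previous paragraphs describe.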

The proportion of positives goes to the floor. For example, averaging over 20 different simulation runs, here’s what happens if everyone starts off with 10 topics each, and problems are 30% more likely than positives to yield a benefit if shared:

After 5 rounds, the proportion of positive shares hovers around 10%, despite 90% of news items being positives.

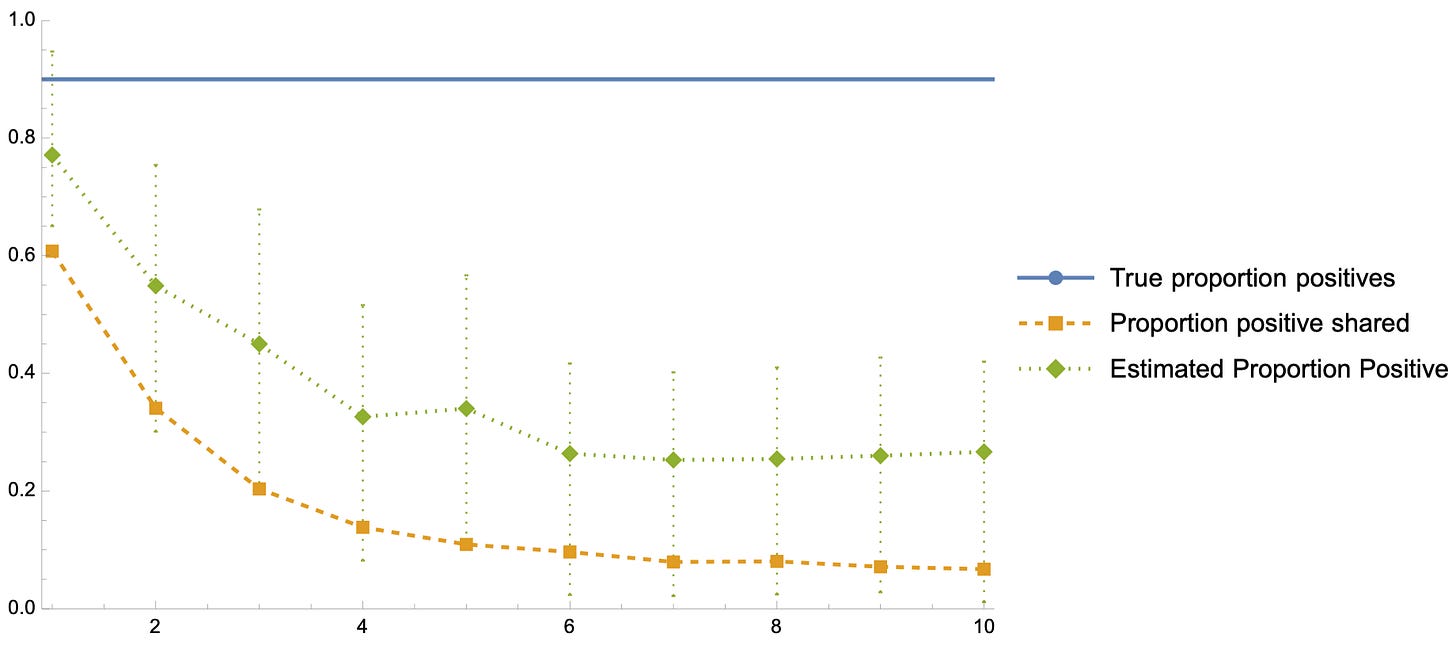

This leads to stark overestimates of how bad things are. Here are the same Bayesian agents[2], when they condition on the proportion of positives they see shared in their social network across rounds:

Upshot: once embedded in a social network, our Bayesians confidently estimate that around 30% of news is positive, when in fact 90% is.
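The assumption in the second footnote (that these Bayesians can't track the ramifying effects of network sharing) can be illustrated with a minimal Python sketch. Suppose an observer reads the network-level share rate through a single-round model, here a hypothetical closed-form, many-topics model in which topic benefits are uniform on [0, 1] and scaled by 1.3 for problems. Inverting that model gives the fraction of positive news that would explain the observed rate. Feeding in the roughly 10% positive share rate reported above yields an estimate well below 90%, even with the true precision of 10, because the model ignores the re-sharing cascade. The function names are hypothetical, and this point-estimate inversion stands in for the full Bayesian update.

```python
import math


def mean_exp(precision, scale):
    """E[exp(precision * scale * U)] for U ~ Uniform(0, 1)."""
    a = precision * scale
    return math.expm1(a) / a


def predicted_share_rate(p, precision, problem_boost=1.3):
    """Single-round, many-topics model: P(shared topic is positive)."""
    pos = p * mean_exp(precision, 1.0)
    neg = (1 - p) * mean_exp(precision, problem_boost)
    return pos / (pos + neg)


def implied_positive_share(observed_rate, precision, problem_boost=1.3):
    """Invert predicted_share_rate: which p would produce observed_rate?"""
    # Odds form: p / (1 - p) = [r / (1 - r)] * E_problem / E_positive.
    ratio = mean_exp(precision, problem_boost) / mean_exp(precision, 1.0)
    odds = observed_rate / (1 - observed_rate) * ratio
    return odds / (1 + odds)


if __name__ == "__main__":
    for believed in (10, 3):
        print(believed, round(implied_positive_share(0.10, believed), 2))
```

The round trip `implied_positive_share(predicted_share_rate(p, b), b)` returns `p`, so the single-round model is internally consistent. The underestimate comes entirely from feeding it a rate that the network has already skewed.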

What to make of this?

Conversational and social-media dynamics induce a strong biasing effect toward negative content—even when each individual is just trying their best to be helpful. Given how strong these effects are, even sophisticated people will be led into excess pessimism.

This is a simplistic model. But I think it captures a core dynamic that’s often overlooked or underestimated: there are perfectly sensible reasons why public discourse would tend to be so negative, and perfectly reasonable responses to this fact will lead to excess pessimism.

No wonder the vibes are bad. Maybe things aren’t as bad as they seem?

[1] Precisely: they think that people use a softmax precision of 3, rather than 10, in deciding which topic to share.

[2] I’m assuming that our Bayesians can’t track the ramifying effects of social-network sharing. Of course, ideal Bayesians would, but doing so would be intractably complex. I’m in no position to model it properly with my computer, so it certainly is not something real people can be expected to know how to integrate.

Could part of the problem be the size of the problems? If we think about things 100 years ago, they were probably much smaller in scale. A local problem in your town or community might be bad, but you can realistically go about fixing it. The local church has a hole in the roof, you pass around a collection, and local handymen fix it. There is a loud pub that wakes people up every night; it could have quiet hours enforced. Problems now might be much bigger: global warming, Russian nukes, the EU breaking encryption across a whole continent. While it's easy to organise and change something locally, larger problems (or problems in another part of the world, which we now know about) are difficult, if not impossible, for people to solve. This could give more of a sense of helplessness and hopelessness.

Great post. My one critique is this quote:

"Upshot: Even people who realize that conversation-topics aren’t representative will still be led to (some) excess pessimism by just how negative conversations tend to be."

The implication, as I read it, is that teaching people about the over-representation of negative narratives is a fool's errand, since even Bayesians will still have an overly negative view of the world.

My response is that we know focusing on the negative has an evolutionary basis, and some amount of excess pessimism is good. The ideal (not the most accurate) estimate of positive news is likely lower than the true value of 90%. While it's true that even Bayesians are still overly pessimistic, it could be that their prior knowledge is the antidote needed to combat the worst effects of extreme pessimism caused by social media. Beyond a certain point of pessimism, the solution changes from "let's work on the bad" to "let's get rid of the system and start over". The rise of populism and illiberalism can be thought of as a function of the growth of this second type of thinking. Teaching people about the over-representation of negativity is the key to returning people to a "normal" amount of pessimism.