Why Rationalize? Look and See.

2400 words; 10-minute read.

I bet you’re underestimating yourself.

Humor me with a simple exercise. When I say so, close your eyes, turn around, and flicker them open for just a fraction of a second. Note the two most important objects you see, along with their relative positions.

Ready? Go.

I bet you succeeded. Why is that interesting? Because the “simple” exercise you just performed requires solving a maddeningly difficult computational problem. And the fact that you solved it bears on the question of how rational the human mind is.

As I’ve said before, the point of this blog is to scrutinize the irrationalist narratives that dominate modern psychology. These narratives tell us that people’s core reasoning methods consist of a collection of simple heuristics that lead to mistakes that are systematic, pervasive, important, and silly—that is, in principle easily correctable. (Again, see authors like Fine 2005, Ariely 2008, Kahneman 2011, Tetlock and Gardner 2016, Thaler 2016, and many others.)

Much of this project will involve scrutinizing particular irrationalist interpretations of particular empirical findings in order to offer rational alternatives to them. But it’s worth also spending time on a bigger-picture question: How open should we be to those rational alternatives? When we face conflicting rational and irrational explanations of some empirical phenomenon, which should we be more inclined to accept?

Given the dominance of the irrationalist research program in psychology, you might incline strongly toward the irrationalist explanation. Many do. The point of this post (and others to follow) is to question that inclination––to suggest that rational alternatives have more going for them than you might have thought.

I should say now that this argument doesn’t claim to be decisive––there are plenty of ways to resist. But there are also ways to resist that resistance, and to resist the resistance to that resistance to that resistance, and… …and of course none of us have time for that in a blog post. Instead, I just want to get the basic argument on the table.

(2000 words left)

Two Systems

I’m going to make this argument by contrasting the scientific literature on two different cognitive systems. No, not Kahneman’s famous “System 1” and “System 2”. Rather, two much more familiar aspects of your cognitive life: what you see, and what you believe—as I’ll call them, your “visual system” and your “judgment system”. (I have no claim to expertise on either of these literatures, but I do think my rough characterization is accurate enough—if you think otherwise, please tell me!)

Start with your visual system. According to our best science, what is the purpose of your visual system, the problem it faces, and the solution it offers?

The Purpose: To help you navigate the world. More precisely, to (very) quickly build an accurate 3D map of your spatial surroundings so that you can effectively respond to a dynamic environment.

The Problem: Recovering a 3D map of your surroundings based on a 2D projection of light onto your retinas is what’s sometimes called an “ill-posed problem”––there is no unique solution. For example, suppose (rather surprisingly) upon doing my exercise you saw a scene like this:

Where would you place the wine glasses relative to each other? Equally close, no doubt. (And you’d be correct.) But strictly speaking that is underdetermined by the picture––you’d see the exact same pattern of light if, instead of two normal-sized wine glasses equally close, one of them were proportionally bigger and farther away. (In more complex scenes, more complex alternatives would do the same trick.)
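A minimal sketch of that ambiguity, assuming a simple pinhole model of the eye in which an object of height h at distance d subtends a visual angle of 2·atan(h/(2d)). The function name `visual_angle` and the particular heights and distances are hypothetical, chosen only for illustration; the point is that scaling size and distance together leaves the retinal image unchanged.

```python
import math

def visual_angle(height_m: float, distance_m: float) -> float:
    """Visual angle (radians) subtended by an object, assuming a pinhole eye."""
    return 2 * math.atan(height_m / (2 * distance_m))

# Hypothetical scene: a normal 0.2 m wine glass at 1 m, versus a
# glass twice as tall placed twice as far away.
normal = visual_angle(0.2, 1.0)
giant = visual_angle(0.4, 2.0)

# Both hypotheses project the same angle, so the image alone can't tell them apart.
print(math.isclose(normal, giant))  # True
```

Any proportional scaling (three times the size at three times the distance, and so on) gives the same angle, which is why the 2D image by itself can't settle the 3D layout.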

In other words, one of the core problems your visual system faces is that you cannot deduce with certainty the correct 3D map of your surroundings from the 2D projection of them onto your eyes. And yet––somehow––you do manage to come to the right conclusions, virtually every waking minute.

How do you do it?

The Solution: Arguably the most successful models of how you manage this fall within the class of Bayesian “ideal observer” models. These models ask, “What would an ideally rational agent who optimally used incoming information conclude about the visual scene?” They use literally the same formalism as the standard model of rational belief that is so maligned by psychologists working on the judgment system––more on that in a moment.
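To give a rough feel for what “ideal observer” reasoning involves, here is a toy Bayesian sketch. It is not one of the published vision-science models. It assumes a simple pinhole eye (visual angle 2·atan(h/(2d)) for an object of height h at distance d), a hypothetical prior that most wine glasses are about 0.2 m tall, a uniform prior over a few candidate distances, and Gaussian noise on the measured angle. The prior, the grid, and the `NOISE_SD` value are all made up for illustration.

```python
import math

# Hypothetical hypothesis space and priors (illustrative numbers only).
SIZE_PRIOR = {0.2: 0.90, 0.4: 0.08, 0.8: 0.02}  # glass height (m) -> prior probability
DISTANCES = [0.5, 1.0, 2.0, 4.0]                # candidate distances (m), uniform prior
NOISE_SD = 0.005                                # assumed measurement noise (radians)

def visual_angle(height_m: float, distance_m: float) -> float:
    """Visual angle (radians) under a simple pinhole-eye assumption."""
    return 2 * math.atan(height_m / (2 * distance_m))

def likelihood(observed: float, height_m: float, distance_m: float) -> float:
    """Unnormalized Gaussian likelihood of the observed angle under one hypothesis."""
    err = observed - visual_angle(height_m, distance_m)
    return math.exp(-0.5 * (err / NOISE_SD) ** 2)

def posterior(observed: float) -> dict:
    """Bayes' rule over every (height, distance) pair: prior x likelihood, normalized."""
    p_distance = 1 / len(DISTANCES)
    scores = {
        (h, d): p_h * p_distance * likelihood(observed, h, d)
        for h, p_h in SIZE_PRIOR.items()
        for d in DISTANCES
    }
    total = sum(scores.values())
    return {hyp: s / total for hyp, s in scores.items()}

# The image a normal-sized glass at 1 m would produce.
observed = visual_angle(0.2, 1.0)
post = posterior(observed)
best = max(post, key=post.get)  # most probable (height, distance) pair
print(best, round(post[best], 3))
```

Several hypotheses (a normal glass at 1 m, a double-size glass at 2 m, a quadruple-size glass at 4 m) fit the image equally well; in this toy setup it is the prior over glass sizes that tips the posterior, to about 0.9, toward the ordinary interpretation.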

Regardless of the details, one thing is certain: your visual system uses incredibly sophisticated computations to solve this problem, ones that easily avoid the mistakes that even the most sophisticated computer vision systems still routinely make. (And they still make quite laughable mistakes. Here’s how a state-of-the-art computer vision program classified the following picture:

“A large cake with a bunch of birds on it.” pic.twitter.com/AEMfDDL8mC (INTERESTING.JPG, @INTERESTING_JPG, July 2, 2015)

For other funny examples, see this Twitter feed or page 16 of this paper.)

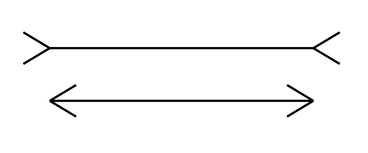

The Narrative: In short, our best science tells us that to solve the ill-posed but all-important problem faced by our visual system, our brains have evolved to implement (approximations of) the rationally optimal response to incoming information. Of course, sometimes these mechanisms lead to systematic errors––as in the famous Müller-Lyer illusion, wherein lines of the same length appear to differ in length because of the arrow-like fins attached to their ends:

But––by the near-optimal design of the system––these mistakes are necessarily either rare or unimportant in everyday life. (Sure enough, the mechanisms behind the Müller-Lyer effect lead to accurate perceptions of length in normal scenarios.)

The punchline? The human visual system is an amazingly rational inference machine.

Now turn to your judgment system. According to our best science, what is the purpose of your judgment system, the problem it faces, and the solution it offers?

The Purpose: To help you navigate the world. More precisely, to build an (abstract) map of the way the world is so that you can use that information to help guide your actions. (This is a pure “functionalist” picture of judgment/belief. One way to resist is to argue that belief has important social/reputational/motivational roles––see e.g. Jake Quilty-Dunn’s new paper on this.)

The Problem: Recovering an accurate judgment about the world based on limited evidence is an “ill-posed problem” in exactly the same sense that recovering a 3D map from a 2D retinal projection is––there is no unique solution.

Take a mundane example: given your limited evidence about me, it’s impossible for you to determine with certainty whether I will walk 3 miles today. (All your evidence could be the same, and I could decide right now to go do so.) Nevertheless, I suspect you’re quite confident that I won’t. And, once again, you are correct. But, as before, that fact was underdetermined by your evidence.

The Solution: The most popular models of how people manage to make judgments in an uncertain world hold that they use a variety of useful but simple “heuristics”. These rules of thumb are so simple that they lead to systematic and severe mistakes, resulting in massive levels of overconfidence. (See my post on overconfidence for a critique of that final claim.)

By simple, I mean simple. These are heuristics like: forming your belief about whether I’ll walk 3 miles today based on a single piece of evidence, or based on whether such an activity is “representative” or “prototypical” of what you know about me; or picking a near-arbitrary estimate of how much I walk on a given day as an “anchor”, and then adjusting it (insufficiently) to settle on your guess of how far I’ll walk today.

Regardless of the details, one thing we are assured of is that the judgment system uses incredibly unsophisticated mechanisms to solve this problem––ones that often make mistakes that even the simplest rational models would avoid (like this one and this one).

The Narrative: In short, our best science tells us that to solve the ill-posed but all-important problem of forming judgments under uncertainty, our brains have evolved to implement simple heuristics that lead to systematic, pervasive, important, and silly errors. Of course, these heuristics often lead to accurate judgments; but––by the poor design of the system––they also lead to errors that are common and important in everyday life.

The punchline? The human judgment system is a surprisingly irrational inference machine.

(1000 words left)

The Challenge

The contrast between these two narratives is striking––all the more so since the basic contours of the problems are so similar.

I think we can use this contrast to buttress an “evolutionary-optimality argument” in favor of rational explanations. (The gist of such arguments is far from original to me––it often helps motivate the “rational analysis” approach to cognitive science that has been gaining momentum in recent decades.)

The argument starts with Quine’s famous quip: “Creatures inveterately wrong in their inductions [i.e. judgments based on limited evidence] have a pathetic but praiseworthy tendency to die before reproducing their kind” (Quine 1970, 13). In other words, we have straightforward evolutionary reasons to think that people must make near-optimal use of their limited evidence in forming their beliefs––for otherwise their ancestors would easily have been outcompeted by others who made better use of the evidence.

Of course, that conclusion is both too grand and too quick. There are several reasons the argument could go wrong––here are three important ones:

(1) Perhaps the mistakes people make are not important enough to be corrected by evolutionary pressure.

(2) Perhaps it was too difficult for evolution to hit upon reasoning methods that avoided these mistakes.

(3) Perhaps the reasoning methods people use were near-optimal in our evolutionary past, but are no longer effective today.

My Claim: Although these are all legitimate concerns, they become less compelling once we see the contrast between the narratives on vision and judgment.

First, since few defenders of irrationalist explanations will say that the errors they’ve identified are uncommon or unimportant, option (1) is an unpromising strategy. After all, much of the interest in this research (and the way it gets its funding!) comes from the fact that the reverse is supposed to be true. Some representative quotes:

“What would I eliminate if I had a magic wand? Overconfidence.” (Kahneman 2015)

“No problem in judgment and decision-making is more prevalent and more potentially catastrophic than overconfidence” (Plous 1993, 213).

“If one were to attempt to identify a single problematic aspect of human reasoning that deserves attention above all others, the confirmation bias would have to be among the candidates for consideration... it appears to be sufficiently strong and pervasive that one is led to wonder whether the bias, by itself, might account for a significant fraction of the disputes, altercations, and misunderstandings that occur among individuals, groups, and nations.” (Nickerson 1998, 175)

What about option (2)––the proposal that rational Bayesian solutions to the problems faced by the judgment system were too difficult for evolution to hit upon? The narrative from vision science casts doubt on this: the visual system has hit upon (approximations of) the rational Bayesian solutions, suggesting that such solutions were evolutionarily accessible.

Of course, there is much more to be said about potential asymmetries between the evolutionary pressures on the visual and judgment systems––e.g. in terms of time-scales (how long have these systems been under such pressure?) or domain complexity (are optimal solutions harder to hit upon for judgment than for vision? See Fodor 1983; Tyler Brooke-Wilson has some interesting work on this). But that way lies the sequence of resistance to resistance to resistance… which none of us have time for. The simple point that Bayesian solutions were hit upon in one domain suggests, at the least, that they are more evolutionarily accessible than we might have thought.

What about option (3)––the claim that our judgment-forming methods were near-optimal in the evolutionary past, but not today? This may be the best response for the defender of irrationalist narratives, but it has at least two problems.

First, we are not talking about the optimality of specific methods of solving specific problems––everyone agrees that humans are not optimal at performing long division. What we are talking about is the basic architecture of how people represent and reason with uncertainty. Is it (a) a broadly Bayesian picture, assigning probabilities to outcomes and responding to new evidence by updating those probabilities in a rational way, or (b) a heuristics-driven picture, governed by simple rules of thumb that systematically ignore evidence, are easily affected by desires, and so on? There is a general framework for reasoning rationally under uncertainty––that’s the point of Bayesian epistemology. So the question is why evolution would’ve hit upon this framework in one specific domain (vision), but entirely missed it in another (judgment).
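For readers who want the “broadly Bayesian picture” in (a) spelled out, here is a minimal sketch of Bayes’ rule applied to a discrete set of hypotheses, using the walking example from earlier. All the probabilities are hypothetical placeholders, and `bayes_update` is just an illustrative helper name, not anyone’s published model.

```python
def bayes_update(prior: dict, likelihood: dict) -> dict:
    """Return P(H | E) for each hypothesis H, given P(H) and P(E | H)."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalized.values())
    return {h: p / total for h, p in unnormalized.items()}

# Hypothetical prior: walking 3 miles today is unusual.
belief = {"walks": 0.05, "no_walk": 0.95}

# Hypothetical evidence 1: a mention of a busy writing day.
belief = bayes_update(belief, {"walks": 0.3, "no_walk": 0.6})
# Hypothetical evidence 2: a mention of new walking shoes.
belief = bayes_update(belief, {"walks": 0.5, "no_walk": 0.1})

print({h: round(p, 3) for h, p in belief.items()})  # {'walks': 0.116, 'no_walk': 0.884}
```

Because each update just multiplies by a likelihood and renormalizes, updating on the two pieces of evidence in the opposite order yields the same final belief: a small instance of the internal consistency that makes the framework general rather than domain-specific.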

Second, option (3) relies on the claim that today there is a radical break from our evolutionary past not only in the problems we face, but also in the types of reasoning that are helpful for solving those problems. The claim must be that the simple heuristics that lead to rampant mistakes today were serviceable (in fact, near-optimal) in our evolutionary history––that people who used such heuristics would not have been outcompeted by people who reasoned better.

That claim is questionable. The problems faced by a forager looking for food or a hunter tracking prey while avoiding predators are different in substance but not in subtlety from a politician looking for a catchy slogan or a millennial writing an eye-catching tweet while avoiding saying something politically unacceptable. Why would the reasoning mechanisms that lead to systematic, pervasive, important, and silly errors in the latter domains (“form a belief based on a single piece of evidence”, “ignore counter-evidence”, etc.) be perfectly serviceable––in fact, near-optimal––in the former?

Upshot: the defender of irrationalist narratives is left with a genuine challenge: since evolution has found such ingenious solutions to the problem of seeing, why has it landed on such inept solutions to the problem of believing?

That challenge provides reason to doubt that our belief-forming mechanisms are really so inept after all––to go back to that list of 200-or-so cognitive biases with an open mind. As we’ll see, we can offer rational explanations of many of them. I think we should take those explanations seriously.

What next?

If you want to see some of these rational alternative explanations, see this post on overconfidence or this post on confirmation bias and polarization.

If you want to learn more about “rational analysis” as an approach to cognitive science, chapters 1 and 6 of John Anderson’s The Adaptive Character of Thought are the classic statements of the approach. For summaries of the latest research, see this 2011 article in Science or this TED talk by MacArthur-“genius”-grant-recipient Josh Tenenbaum.

PS. Thanks to Dejan Draschkow for literature suggestions and Tyler Brooke-Wilson for helpful feedback on an earlier draft of this post.