Trump won.

Within hours, the pundits had come out.

They proposed diagnoses of why he won: institutional failures, cultural backlash, big money, political unoriginality, or luck.

They pointed to mistakes: Biden shouldn’t have run again, Harris should’ve gone on Joe Rogan, the Democrats should’ve proposed a clearer vision, and so on.

They claimed it was predictable: they said they weren’t surprised, or even that they predicted it. (Them too.)

Soon after (or before!), the charges of hindsight bias started flying:

Of course, Williams is right that people have no ability to predict close elections—in contexts like this, expert forecasts are worse than chance. And he’s right that no one should be certain of the causes of complex, chaotic events like this.

Moreover, this line of reasoning is certainly tempting: if after the fact people can correctly diagnose the causes, or identify mistakes, or claim to have predicted it, why aren’t their predictions better? Should we dismiss post-election punditry?

No.

The pundits are, more or less, acting reasonably. The fact that Trump won is evidence in favor of the claims they’re making. As long as they’re making educated guesses—and not pretending that they’re certain—we shouldn’t dismiss them as hindsight bias.

In fact, doing so undercuts our ability to learn. So-called “hindsight bias” is how we figure out what went wrong, and how to do better next time. We should welcome it.

Three grades of punditry

“Hindsight bias”—aka the “I-knew-it-all-along” effect—is a cluster of related tendencies that people exhibit when they learn that some uncertain outcome happened:

(1) They become more confident in their diagnoses of what caused it;

(2) They become more confident that people should’ve seen the outcome coming, and acted accordingly; and

(3) They sometimes become more confident that they predicted the outcome.

People exhibit all of these trends in the lab. And pundits exhibit them in the wild.

(1) is proposing diagnoses. (2) is pointing to mistakes. And (3) is saying they weren’t surprised.

Here’s the kicker: each of these tendencies is what rational people (“Bayesians”) would do in this situation.

Why?

In brief: things are evidence for things that are correlated with them. Seeing a coin land heads is evidence that it’s biased toward heads; seeing me eat lentils is evidence that I like lentils; and so on.

More generally: if X is a quantity that you think is positively correlated with a possible outcome, then seeing the outcome happen should raise your estimate of X. (That’s a theorem.)
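To make the theorem concrete, here is a small Python sketch with a made-up joint distribution over a quantity X and an outcome. It computes your estimate of X before and after conditioning on the outcome, and checks the identity E[X | outcome] = E[X] + Cov(X, outcome) / P(outcome), treating the outcome as a 0/1 indicator. Since P(outcome) is positive, a positive covariance means the estimate goes up. All the numbers are illustrative assumptions, not data.

```python
from fractions import Fraction as F

# Made-up joint distribution over (x, outcome) pairs. Think of x as a 0-10
# score for some quantity (say, institutional failure) and outcome as True
# if the event happened. Exact fractions keep the arithmetic exact.
joint = {
    (2, True): F(1, 10),
    (2, False): F(3, 10),
    (8, True): F(4, 10),
    (8, False): F(2, 10),
}


def expect(f):
    """Expected value of f(x, outcome) under the joint distribution."""
    return sum(p * f(x, o) for (x, o), p in joint.items())


e_x = expect(lambda x, o: x)                          # estimate of X beforehand
p_a = expect(lambda x, o: int(o))                     # probability of the outcome
cov = expect(lambda x, o: x * int(o)) - e_x * p_a     # Cov(X, outcome indicator)
e_x_given_a = expect(lambda x, o: x * int(o)) / p_a   # estimate after seeing it

# The identity behind "seeing the outcome raises your estimate of X".
assert e_x_given_a == e_x + cov / p_a
print(f"before: {float(e_x)}, after: {float(e_x_given_a)}, cov: {float(cov)}")
```

With these numbers the covariance is positive (0.6), and the estimate of X rises from 5.6 to 6.8 once you learn the outcome happened.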

Now set the outcome to “Trump won”. Try out various Xs.

Let X be the amount that institutions have failed. That’s correlated with whether Trump would win—so, upon learning that he won, you should raise your estimate for the amount of institutional failure.

Let X be how much evidence there was that Trump would win. That’s correlated with whether Trump would win—so, upon learning that he won, you should raise your estimate for how much Democrats should’ve seen it coming, and (so) acted differently.

Let X be how much you expected Trump to win. That’s correlated with whether Trump would win—your beliefs are correlated with the truth, after all—so, upon learning that Trump won, you should raise your estimate for how much you expected it.

That was quick. Let’s go through this all a bit more carefully.

1) Proposing diagnoses is causal inference

Pundits have become more confident about the causes for Trump’s win—pointing to institutional failures, big money, and the like.

This is good old Bayesian updating about the objective probabilities in response to new evidence about them.

Consider an analogy. I’ve grabbed a coin that's either biased-heads or biased-tails from my bucket of biased coins. I’ll toss it once. How likely do you think it is to land heads?

You have no idea—you’re 50-50—since you have no idea which coin I grabbed. Your predictions about what will happen when I toss it are—like our expert forecasts—no better than chance.

But your post-hoc diagnoses are quite accurate. I just flipped it, and it landed heads. Why do you think it landed heads? Obvious answer: because it was biased-heads. Although you can’t be confident of the outcome or its cause beforehand, learning the outcome gives you more information, helping you pinpoint the cause.
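The coin story is easy to check by computing it. The sketch below assumes, purely for illustration, that each coin type is equally likely to be grabbed and that a biased coin lands on its favored side 3/4 of the time (the exact bias is not specified above). Predicting the toss is right only half the time, while diagnosing the coin from the toss is right 3/4 of the time.

```python
from fractions import Fraction as F

# Assumed numbers: both coin types are equally likely to be grabbed, and a
# biased coin lands on its favored side 3/4 of the time.
prior = {"biased-heads": F(1, 2), "biased-tails": F(1, 2)}
p_heads = {"biased-heads": F(3, 4), "biased-tails": F(1, 4)}

# Before the toss: probability of heads, averaging over which coin it is.
p_heads_overall = sum(prior[c] * p_heads[c] for c in prior)

# After seeing heads: Bayes' rule gives P(coin | heads).
posterior = {c: prior[c] * p_heads[c] / p_heads_overall for c in prior}

# Long-run accuracy of two strategies, computed exactly.
# Predicting the toss: any fixed guess is right with probability 1/2 here.
predict_accuracy = p_heads_overall
# Diagnosing the coin: guess it is biased toward whichever side came up.
diagnose_accuracy = sum(
    prior[c] * (p_heads[c] if c == "biased-heads" else 1 - p_heads[c])
    for c in prior
)

print(f"P(heads) = {p_heads_overall}")
print(f"P(biased-heads | heads) = {posterior['biased-heads']}")
print(f"prediction accuracy = {predict_accuracy}, diagnosis accuracy = {diagnose_accuracy}")
```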

The same thing is happening when pundits point to (say) institutional failures as a cause of Trump’s election.

We’ve known that institutional failures were driving some people to like Trump. The question we were uncertain about is (let’s say, for simplicity) whether the effect was Big or Small. Before the election, we were unsure—so our estimate for the causal impact of institutional failures was middling.

But here’s something we knew: if the effect is Big, then Trump’s likely to win; and if the effect is Small, Trump’s not as likely to win.

Then we learn that Trump won. Things are evidence for things that make them likely. So his win is evidence that the effects of institutional failures were Big.
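Here is the same update with numbers attached. Every number below is a hypothetical assumption, chosen only so that the prior is 50-50 and a Big effect makes a win more likely than a Small one; the effect sizes are invented units. Learning of the win moves the probability of Big from 1/2 to 7/10, and the expected causal impact rises with it.

```python
from fractions import Fraction as F

# Hypothetical numbers: we start 50-50 on Big vs. Small, a Big effect makes
# a win more likely than a Small one, and each hypothesis comes with an
# illustrative effect size (made-up units).
prior = {"Big": F(1, 2), "Small": F(1, 2)}
p_win = {"Big": F(7, 10), "Small": F(3, 10)}
effect_size = {"Big": 5, "Small": 1}

# Before the result: the win itself looks like a coin flip.
p_win_overall = sum(prior[h] * p_win[h] for h in prior)

# After learning of the win: update on the evidence with Bayes' rule.
posterior = {h: prior[h] * p_win[h] / p_win_overall for h in prior}

# Middling estimate of the causal impact before, higher estimate after.
estimate_before = sum(prior[h] * effect_size[h] for h in prior)
estimate_after = sum(posterior[h] * effect_size[h] for h in posterior)

print(f"P(win) before = {p_win_overall}")
print(f"P(Big | win) = {posterior['Big']}")
print(f"estimated effect: {estimate_before} -> {estimate_after}")
```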

Let the op-eds rip.

2) Pointing out mistakes is evidential inference

Pundits have also become more confident that the Biden/Harris campaigns should’ve seen this coming, and acted differently. The finger-pointing has begun.

This is Bayesian updating about what evidence people had—about how likely their evidence made the outcome—in response to observing the outcome.

Consider an analogy. I just booked a plane ticket. The flight might be to Florida, or to somewhere else. And my brother might currently have evidence that I’ll go to Florida, or he might not. (You’re unsure.) How likely do you think it is that I’ll go to Florida? And how much evidence do you suspect my brother has that I’ll go to Florida?

You have no idea, obviously—about either where I’ll go, or what my brother’s evidence suggests about where I’ll go. Your predictions are no better than our good-for-nothing expert forecasters.

But your post-hoc assessments of what evidence my brother had are quite accurate.

It turns out that I am going to Florida. Given that information, how much evidence do you think my brother had that I’m going to Florida—i.e., how much do you think he should predict it? Probably quite a bit.

My brother’s probably more informed than you. So learning that I’m going to Florida gives you reason to think that I’ve told my brother, or he’s heard from my parents, or he otherwise should expect it (maybe I go to Florida every year). And you’d be right.

The same thing is happening when pundits point to (say) Harris’s refusal to go on Joe Rogan’s podcast as a strategic mistake.

Harris concluded that avoiding Joe Rogan wouldn’t hurt her. Was that decision rational, or was it a mistake—did she have good evidence that it would hurt her?

Of course, we were (and are) unsure—we don’t know what evidence her campaign had. But here’s something we did know: if she did have evidence that it would hurt her, it’s more likely she’d lose; and if she didn’t have evidence that it would hurt her, it’s less likely she’d lose. (Evidence is correlated with truth, after all.)

Well, she did lose. And things are evidence for things that make them likely. So the fact that she lost makes it more likely that she had evidence that avoiding the podcast would hurt her. And that, in turn, makes it more likely that she was making a mistake.
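The chain of inference can be sketched with made-up numbers. Everything below is a hypothetical assumption, including the simplification that skipping the podcast counts as a mistake exactly when the campaign had evidence it would hurt. What matters is the direction and size of the shift: the loss moves the probability of a mistake from 1/2 to 3/5, which is evidence, not proof.

```python
from fractions import Fraction as F

# Hypothetical numbers: 50-50 on whether the campaign had evidence that
# skipping the podcast would hurt, and losing is somewhat more likely if
# that evidence existed (evidence is correlated with truth).
prior_evidence = F(1, 2)
p_lose_if_evidence = F(3, 5)
p_lose_if_no_evidence = F(2, 5)

p_lose = (prior_evidence * p_lose_if_evidence
          + (1 - prior_evidence) * p_lose_if_no_evidence)

# Bayes' rule: P(had evidence | lost).
posterior_evidence = prior_evidence * p_lose_if_evidence / p_lose

# Simplifying assumption: skipping the podcast counts as a mistake exactly
# when the campaign had evidence that it would hurt.
p_mistake_before = prior_evidence
p_mistake_after = posterior_evidence

print(f"P(mistake) before the result: {p_mistake_before}")
print(f"P(mistake | she lost): {p_mistake_after}")
```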

Let the blame-games commence.

3) Retrodiction is belief inference

Pundits have, at least in some cases, become more confident that they predicted that Trump would win, after he won.

This is often the result of Bayesian updating about what your past self thought.

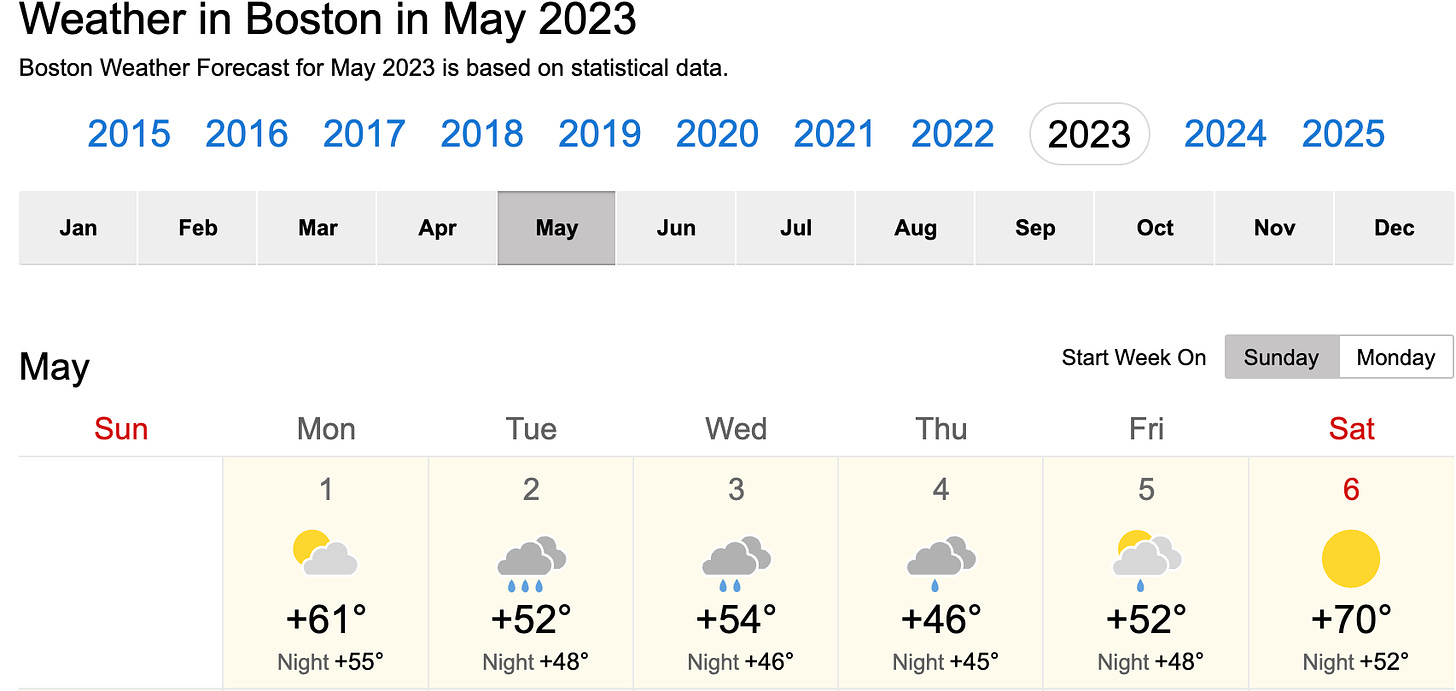

Consider an analogy. I don’t remember whether it rained in Boston on May 5, 2023. I also don’t remember whether, on May 4, 2023, I predicted that it would rain the following day.

But let’s look it up… It looks like it did rain in Boston on May 5, 2023.

In light of that, I’m now quite confident that I did predict, the day before, that it would rain—after all, I was in Boston, and I check the weather every day.

When you don’t remember (exactly) whether you predicted rain—or exactly how confident you were in that prediction—then so long as you trust your judgment, learning that it rained is evidence that you did predict it.
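The same arithmetic applies to your past self. In the sketch below, the probabilities are illustrative assumptions: a 2/5 prior that I predicted rain, and, if I trust my judgment, a forecast that makes rain 4/5 likely when I predicted it and 1/5 likely when I didn’t. Learning that it rained pushes the probability that I predicted it from 2/5 up to 8/11; if my forecasts carried no information, it would stay at 2/5.

```python
from fractions import Fraction as F


def p_predicted_given_rain(prior_predicted, p_rain_if_predicted, p_rain_if_not):
    """Bayes' rule for P(I predicted rain | it rained)."""
    p_rain = (prior_predicted * p_rain_if_predicted
              + (1 - prior_predicted) * p_rain_if_not)
    return prior_predicted * p_rain_if_predicted / p_rain


# Hypothetical numbers. Before looking anything up, I give 2/5 to having
# predicted rain.
prior = F(2, 5)

# If I trust my judgment, my forecasts track the weather.
trusted = p_predicted_given_rain(prior, F(4, 5), F(1, 5))

# If my forecasts were uninformative, rain would tell me nothing about them.
uninformative = p_predicted_given_rain(prior, F(1, 2), F(1, 2))

print(f"trusted forecaster: {prior} -> {trusted}")
print(f"uninformative forecaster: {prior} -> {uninformative}")
```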

The same thing is happening when pundits (like Ross Douthat) claim that the election played out how they predicted.

More carefully: Douthat did predict that Trump would win. But what did he predict, exactly? Did he have in mind that Trump would win in a huge red shift across the country, with large gains among Black and Hispanic voters?

We’re not sure. And, presumably, even he’s not sure. He didn’t spell out every detail of his predictions. And—more than that—he didn’t say how confident he was in each particular component of those predictions.

To radically simplify, suppose Ross remembers that he said, “I think Trump will have a good night”. Now here he is, on election day, wondering exactly what he was thinking when he made that prediction. Perhaps he was thinking that Trump would have a Decisive Win, or perhaps he was thinking that Trump would have an Electoral-College Win.

He’s unsure. But he trusts his judgment: if his past self thought that Trump would have a Decisive Win, that makes it more likely that Trump would have a decisive win; if his past self thought that Trump would (merely) have an Electoral-College Win, that makes it less likely that Trump would have a decisive win.

Then Ross learns that Trump had a decisive win. Things are evidence for things that make them likely. So that’s evidence that when he made his prediction, he thought Trump would have a Decisive Win.

He meditates on this fact as he goes into the recording studio…

So What?

All of these tendencies—to (1) propose diagnoses, (2) point to mistakes, and (3) claim it was predictable—are reasonable. In fact: if people did not have these tendencies to at least some extent, then that would be irrational—it’d be a stubborn refusal to update their beliefs based on new evidence.

That’s not to say that these conclusions are correct. Nor is it to say that pundits never overdo it.

To say that Trump’s election provides evidence in favor of a diagnosis (mistake, prediction…) is to say that it raises the probability—not that it makes the probability certain, or even very high. So Williams and company are right to warn against a false sense of certainty about why Trump won, who’s to blame, etc. The reasonable attitude in response to a surprising result is one of curiosity and humility.

Still, I doubt that many of those pundits are that confident in their punditry. It’s the job of pundits (and Substack writers!) to have “interesting takes”—which involves a tradeoff between being accurate (saying something that’s true) and being informative (saying something that’s big-if-true).

At times like this, we want them to make that tradeoff. We want them to raise hypotheses that are interesting but uncertain so that we can consider them, search for evidence about them, and try to get to the bottom of things.

If we want the truth to out, we should let the pundits be pundits.